2021. 4. 8. 15:51ㆍ[Paper Review]

pdf : arxiv.org/pdf/1912.03874.pdf

Input : 3D lidar point cloud

output : point-wise classification (noise/de-noise)

논문 citation : R. Heinzler, F. Piewak, P. Schindler and W. Stork, "CNN-Based Lidar Point Cloud De-Noising in Adverse Weather," in IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 2514-2521, April 2020, doi: 10.1109/LRA.2020.2972865.

summary :

2D convolution을 활용하여 3d point cloud를 입력받아 각 point가 날씨로 인한 noise인지 아닌지 판단하는 네트워크

네트워크는 LiLaNet을 조금 변형하여 구성

본 논문에서는 두 가지 데이터세트를 활용

(climate chamber에서 취득한 정적인 상태의 데이터 &

주행에서 얻은 맑은 날씨 데이터에 weather augmentation 적용한 데이터)

words

To that extent,~~ 그만큼, 그정도로

anti-aliasing / aliasing:컴퓨터 그래픽에서 해상도의 한계로 선 등이 우둘투둘하게 되는 현상

spatial vicinity : 공간적 근접

Contents:

Intro

1. 거리 데이터는 매우 심하게 손상된다.

measurements are highly impaired by fog, dust, snow, rain, pollution, and smog [1]–[6].

2. back-scattered 현상을 보인다.

Such conditions cause erroneous point measurements in the point cloud data which arise from the reception of back-scattered light from water drops (e.g rain or fog) or arbitrary particles in the air (e.g. smog or dust)

3.cnn 기반 연구들이 noisy measurement 를 없애려고 연구됨

CNN-based lidar perception algorithms might be better able to cope with such issues given their learning capacity thereby reducing the need for an explicit handling of noisy measurements.

4.그리하여 pre-processing step 에서 많은 연구가 이뤄짐

This has sparked a large body of research on algorithms to detect and handle noisy point cloud measurements in a pre-processing step before applying perception algorithms.

관련연구

1. 3d point로 noise point discard 하는 연구

De-noising algorithms in 3D space are often based on spatial features to discard noise points caused by rain or snow [4], [15].

1.번 연구에서의 단점 : 가까이 있는거를 잘 거르거지만 / 멀리있는 작은 물체들도 noise 로 취급함

As these techniques are discarding points based on the absence of points in their vicinity, smaller objects at medium to large distances might be falsely suppressed and marked as noise.

2. 한 포인트씩 잡을 필요는 없지만 clutter 가 안잡혀서 부근의 데이터가 줄어든다.

Fig. 1 indicate that modern lidar sensors, e.g. the Velodyne VLP32C, do not necessarily perceive drops of water from fog or rain as a single point, but often as multi-point clutter in the near to mid range which significantly reduces the applicability of filtering based on spatial vicinity only.

3. 리얼 월드 시나리오에서 악의환경속에서 테스트는 gt data 가 없어 힘들다. 그러나 우리는 해봤다.

Experimentally validating such filtering algorithms in realworld scenarios under adverse weather conditions is very challenging due to the lack of proper ground truth. / Experimentally validating such filtering algorithms in realworld scenarios under adverse weather conditions is very challenging due to the lack of proper ground truth.

4. 그리고 data augmentation 방법으로 실제 좋은 날씨의 데이터를 adverse weather effect 주는것을 사용했다.

we employ a data augmentation approach to emulate adverse weather effects on real-world data that has been previously obtained in good weather conditions [16].

Contribution

1. cnn 기반 denosing 을 처음했고 속도가 효율적

The first CNN-based approach to lidar point cloud arXiv:1912.03874v2 [cs.CV] 12 Feb 2020 de-noising with a significant performance boost over previous state-of-the-art while being very efficient at the same time

2. data augmentation 을 통해 실제 악의상황과 비슷하게 만듬

A data augmentation approach for adding realistic weather effects to lidar point cloud data

3. 질적으로 각 포인트에 대한 레벨을 발전시켯고(labeling) 제어되는 환경에서 여러 환경을 구현

A quantitative and qualitative point-level evaluation of de-noising algorithms in controlled environments under different weather conditions.

관련연구 2

1. lidar sensor 에 극악환경이 영향을 많이 끼친다.

Adverse weather conditions such as fog, rain, dust or snow have a huge impact on the perception of lidar sensors, as shown in [1]–[3], [5], [17]–[23].

2. 많은 데이터가 좋은 날시에서 데이터다.

Most state-of-the-art data sets are recorded mostly under favorable weather conditions only (e.g. [25]–[27]).

연구 A : dense point cloud denosing

1. 2d depth image de-noising 연구는 dense depth 정보를 stereo cam, depth cam 에서 받아서 진행됨.

Previous work on 2D depth image de-noising is mainly based on dense depth information obtained by stereo vision and depth cameras (e.g. Intel RealSense, Microsoft Kinect, etc.) [13], [28].

2. 이 방법은 3가지로 나눠진다

These approaches can be split in three different categories: (1) spatial, (2) statistical and (3) segmentation-based methods.

3. spatial 방법을 얘기함 : 주변 pixel value의 평균을 구하고, observed pixel 로 부터 거리가 멀어짐으로 인해 weight decrease 한다.

Spatial smoothing filters (1), e.g. the Gaussian low pass filter, calculate a weighted average of pixel values in the vicinity, where the weight decreases with the distance to the observed pixel. Points are smoothed by increasing distance from the derived weight [8].

4. 눈에 의해 corrupted 된 데이터가 훌륭히 필터됨

For de-noising 2D point cloud data corrupted by snow, these filter types are providing successful results, as shown by [9] with a median filter.

5. neighborhood 만 variation 이 있다고 가정하면 edges 를 보존하는데 실패한다. / 그리하여 bilateral filter 가 소개됨

For de-noising 2D point cloud data corrupted by snow, these filter types are providing successful results, as shown by [9] with a median filter.

The bilateral filter, introduced by [8] for gray and color images, is replacing traditional low-pass filtering by providing an edge preserving smoothing filter for dense depth images [10].

6. 두번째 확률적 필터

Statistical filter methods (2) for dense point cloud denoising are often based on maximum likelihood estimation [29] or Bayesian statistics [11]. By optimizing the decision whether a points lies on a surface or not these approaches are smoothing surfaces and remove minor sensor errors.

7. 세번째 segment-based filter : 필터링 하기전에 segmentation 을 함으로써, seg-based filter 들은 로컬 세그멘트의 포인트클라우드만(정확한 라벨 된것들) 스무딩한다. 그리하여 corner 들이나 질좋은 구조들이 잘 보존된다. [12][13][14] 들이 segmentation 에 사용되고, filateral filter를 local segment 스무딩에 사용된다.

segmentbased filters (3) are smoothing only local segments of point clouds with identical labels. Therefore corners and finer structures are better preserved. Region growing [12], a maximum a-posteriori estimator [13] or edge detection [14] is used for segmentation, while bilateral filters are used for smoothing local segments.

8. 거리가 먼 라이다 데이터는 카메라보다 데이터 밀도가 떨어진다. [4] 번 연구 처럼 median filter 를 포인트 클라우드 데이터에 적용하면 카메라 알고리즘과 다르게 성능이 떨어진다. 200-300 미터 떨어진다면 resolution 이 10분의 1로 떨어진다(degree 와 range 에 대한 데이터)

Lidar point clouds are significantly less dense compared to camera images, particularly at larger distances. As such, the direct application of camera algorithms does typically not achieve the desired result, as exemplified in [4] for a median filter applied to point cloud data. Since conventional lidars have a resolution of a tenths of a degree and a range of two to three hundred meters, the density of the point cloud decreases significantly in the middle and far range.

9. 머신러닝 방법으로 point cloud corruption de-noising 은 2-6m 내의 데이터가 가능 / 메뉴얼로 피처를 뽑아내 KNN 과 SVM 으로 학습함 / feature vector 는 covariance matrix 의 아이겐밸류이고 , 50mm^3 cubic voxel 안에 10개의 포인트보다 많아야 가능하다.

A first machine learning approach for de-noising dense point clouds corrupted by fog with a visibility of 2m and 6m is introduced in [24].By manually extracting features, a k nearest neighbor (kNN) and a support vector machine (SVM) are trained. The feature vector is in particular based on the eigenvalues of the covariance matrix of the Cartesian coordinates, therefore it is only derived if there are more then ten points in a 50mm3 cubic voxel. For a sparse lidar point cloud this assumption is rarely satisfied.

10.

연구 B : spare point cloud denosing

1. d domain 에서 공간에서의 거리적 혹은 확률적 분포를 이용한다, SOR, ROR 필터등이 있다. SOR, ROR 방법은 아래와같음

In the 3D domain many approaches are based on the spatial vicinity or statistical distributions of the point cloud [15], such as the statistical outlier removal (SOR) and radius outlier removal (ROR) filter. The SOR defines the vicinity of a point based on its mean distance to all k neighbors compared with a threshold derived by the global mean distance and standard deviation of all points. The ROR filter directly counts the number of neighbors within the radius r in order to decide whether a point is filtered or not.

2. 위 방법은 [4] 논문에서 denosing task 에 적합하지 않다고 주장함. / DROR 필터가 [4]에서 주장되고 서치하는 반경 r 을 넓혀서 거리가 멀어 less dense 한것을 보안하는 정도(라이다 raw data structrue 고려한것) (상세한건 아래 참고)

Charron et al. [4] have shown that these filter types are not suited for the de-noising task of sparse point clouds corrupted by snow. Thus the enhanced dynamic radius outlier removal (DROR) filter was introduced by [4] which increases the search radius r for neighboring points with increasing distance of the measured point. Since this approach takes the raw data structure of lidar sensors into account, which is less dense at far distances, a better performance could be achieved.

3. 그럼에도 불구하고 이런 방법들은 공간적 접근으로 하나의 점에 대해서 (주변 점들이 없으면) 버리게 된다.(필터된다)

[4] 에서 보면 멀리있는 데이터들은 필터되는게 증가하게 된다(SOR,ROR,DROR). / 그리하여 의미있는 정보, 특히 고속에서, 센서 레인지가 추가적으로 한정되게 된다 필터에 의해.

Nevertheless, these approaches are based on spatial vicinity and consequently discard single reflections without points in the neighborhood. As a result, points at greater distances are increasingly filtered, as shown in [4] for the SOR, ROR and even DROR. Hence, valuable information for an autonomous vehicle, especially at higher speeds, is discarded and the sensor’s range is additionally limited by the filter.

4. 결론으로, sparsity 는 안개나 보슬비 로 생긴 scatter를 filtering 하는데 유효한 특징(valid feature)이 아니다. / 그리고 filter 방법론은 spatial neighborhood 방법만을 사용하여서는 단거리나 장거리에서 실패하는 경향이 더 크다.

In conclusion, we argue that these filter approaches are prone to failure in the near and far range, as only spatial neighborhood is used.In conclusion, we argue that these filter approaches are prone to failure in the near and far range, as only spatial neighborhood is used.

연구 C : semantic segmentation for sparese Point Cloud

1. CNN 에서 필터 접근방법의 장점은 두가지다/ 근본적 데이터 구조를 이해하고, 특성을 보편화 할수있다(어떤 특성이냐 하면 다양한 거리와 지저분한 분포에 대한것) / 더 나아가 이 방법론은 PCD 에 대한 intensity 정보를 포함할수있다.

We propose a filter approach based on a convolutional neural network, which understands the underlying data structure and can generalize its characteristics for various distances and clutter distributions. Furthermore, this approach is able to also incorporate the intensity information of the point cloud.

2. semantic seg 방법은 Lidar PCD 영역에 활용되고있다. 아주 중요한 결과를 보인다[30]~[32]에서./ 메인 장점은 알고리즘이 보편화가 잘 되고, 다른 거리,방향에서 물체를 잘 인식한다

The semantic segmentation task is being further developed by a large scientific community and is already applied to the lidar point cloud domain, showing very promising results [30]–[32]. A major advantage is that the algorithms can generalize very well and thus recognize objects at different distances and orientations.

3. 많은 방법들이 input data layer 와 network structure 에 대한 많은 방법론이 제안되었다. 그중 semantic seather segmentation 에 우리는 활용하고 적용한다. [31] ~ [36] / preprocessing 알고리즘은 컴퓨터 성능에 대해 높은 요구가 있다/ 우리는 2d input layer 방법론에 집중하고, 그 방법론은 통상적으로 bird eye view [33]–[35] 혹은 image projection veiw[31], [32], [36]. 이다.

There are various established approaches for the input data layer and the network structure itself, which we utilize and adapt to the task of semantic weather segmentation [31]–[36]. Since preprocessing algorithms have strong requirements on computation speed, we focus on 2D input layer approaches, which commonly use a birds eye view (BEV) [33]–[35] or an image projection view [31], [32], [36].

4. 최근 소개된 PointPillars [35] 는 feature extraction network를 기반으로 하였고 포인트 틀라우드의 pseudo image out 을 생산한다(포인트 클라우드의 pseudo image out 은 backbone CNN 의 input 으로 사용됨) / kitti object detection challenge 에서 detection performance 와 inference time 에서 뛰어난 성능을 보인다. / 그러나 이 방법론은 아직 pint-wise semantic segmentation 에 적용되진 않았고 object detecion 에서 wide-spread 용도로만 사용되었다. / 그리하여 LiLaNet[31] 의 CNN 구조에서 영감을 받아 2D 접근법을 제안함/

The recently introduced PointPillars by [35] is based on a feature extraction network, generating a pseudo image out of the point cloud which is used as input for a backbone CNN. The approach excels on the KITTI’s object detection challenge [25] in terms of detection performance and inference time. However, the approach has not yet been applied to point-wise semantic segmentation but is in wide-spread use for object detection only. Thus, we propose a 2D approach inspired by the CNN architecture of LiLaNet [31].

METHOD

a. Lidar 2D images

2d 로 데이터를 누른듯 상세한건

State-of-the-art lidar sensors commonly provide raw data in spherical coordinates with the radius r, azimuth angle φ and elevation angle θ, often combined with an estimated intensity or echo pulse width of the backscattered light. The used rotating lidar sensors (Velodyne ’VLP32c’) contain 32 vertically stacked send/receive modules, which are rotating to obtain the 360◦ scan. Similarly to [31] we merge one scan to a cylindrical depth image as a 2D matrix M = (mi, j) ∈ R (n×m) where each row i represents one of the 32 vertically stacked send/receive modules and each column j one of the 1800 segments over the full 360◦ scan with the corresponding azimuth angle φ and timestamp t. As a consequence we obtain the distance matrix D ∈ R (n×m) and intensity image I ∈ R (n×m) .

B. Autolabeling for Noise Caused by Rain or Fog

1. annotation 의 어려움과 ground truth annotation이 필수적이다.

In order to evaluate the quality of a trained classification approach, ground truth annotations are essential. For sparse lidar point clouds, the manual annotation task is very challenging and even more difficult for semantic weather segmentation, where the decision is whether a point is caused by a water droplet or not.

2. 사람이 카메라 데이터 보고 비교하는게 라이다 PCD 로 진행하는것보다 도움됨. / 그러나 물방울 같은것은 카메라로 바로 들어오는게 아니라 특히 장거리에서, 유의미한 날씨 정보에 대한 라벨링이 불가하다. / 그리하여 우리는 제어 가능한 고정된 장면을 사용하였고 사용한 이요는 자동 라벨링 프로시저를 발전시키기 위함이고, 그 방법은 사람의 인지가 필요가 없다.

However, since water droplets cannot be captured directly by passive camera sensors, especially at large distances, this label aid is not available for semantic labeling of weather information. Thus, we utilize the recorded static scenes in controlled environments to develop an automated labeling procedure, which does not involve human perception.

3. lidar 2d images 들을 생성 후 비,안개 상황에서의 데이터와 GT 데이터를 비교함 , 비교할때 D 거리 데이터를 비교함/ 비교할때 여러 프레임에 대한 정보를 누적/ 마이너한 거리 오차(GT data , 악의상황 데이터)는 고려되었다. / 추가로, 찾는 영역에 대한 유효한 거리 제한값은 delta R 로 설정한다. R 값은 35cm 정도 / 그리하면 D 메트릭스를 통해 GT 와 아닌 것들을 구분할수있다.

We stack all f lidar images for each frame k from one sensor in reference conditions to obtain one single point cloud D GT = (d GT i, j,k ) ∈ R (n×m×f) . Subsequently, we compare each distance image D captured during rain or fog with all ground truth images D GT to decide whether a point is labeled as clutter or not.Since the reference measurements are accumulated over several frames, minor measurement inaccuracies of the sensor are already taken into account when comparing the distance images.In addition, a threshold ∆R is added to the search region of valid distances. The threshold value ∆R = ±35 cm was chosen rather high, compared to the specified distance precision of the sensor, in order to minimize the number of false negatives. Hence, the labels are derived mathematically for each distance di, j with the corresponding ground truth vector for this element d GT i, j,k :

4. GT 데이터 라벨링의 질적향상을 위해 recording 을 할때 동일한 사이즈로 분류하여서 날씨 라벨링이 오차가 생기는지 확인했고 그 오차는 미세하다(아래 숫자)

To quantify the error of our ground truth labeling, we applied the label procedure on the reference recording itself. We split the recording during reference for one setup into equally sized parts. The evaluation is done by taking the accumulation of the first split as valid points for labeling the second split and vise versa. As there are no changes in weather conditions, we would expect that all points will be labeled as valid. The evaluation demonstrates a mean per pixel false rate of 0.367±0.053% for both tests.

C. Data Augmentation

1. 날씨가 좋은 상황의 데이터가 접근 가능하다./ 그리하여 semantic weather segmentation 을 하기 위하여, 우리는 [16] 의 비 기반한 안개 모델의 augmentation approach 를 사용함 / 그리하여 ([16]을 사용하여) 우리는 많은 양의 학습데이터를 메뉴얼 라벨링 없이 얻었다. / augmentation algorithm 은 라이다 이미지에 가해졌고 각 개개인의 거리 측정값에 manipulation(조작?) 이 가해지고, occlusion 은 개념적으로 불가능하다 /

State-of-the-art sparse point cloud data sets which are publicly accessible tend to be recorded under favorable weather conditions. To be able to utilize these data sets for semantic weather segmentation, we developed an augmentation approach for rain based on the fog model of [16]. Hence, we obtained a large training data set without requiring manual annotation while providing error-free ground truth. The augmentation algorithm is applied to lidar images to enable manipulations for each individual distance measurement, whereby occlusion is conceptually impossible.

2. 제안한 [16] 모델 기반한 augmentation 은 개개인의 점을 추가할 뿐만 아니라 PCD 의 추가 속성을 변경한다 : 어떤 것이냐 하면 악의 날씨는 viewing range 에 영향을 끼치고, intensity 의 대비를 줄이고, echo pulse 폭을 줄인다.

The proposed augmentation based on the model of [16] does not only add individual points but alters additional attributes of the point cloud: Adverse weather affects viewing range and lowers the contrast of intensity and echo pulse widths, respectively

1) fog model : 라이다 센서의 모델링 자체를 진행했고 [16] 에서, 그리고 본 논문에서 16 과 다른점은 intensities of augmented points 는 로그 평균 분포 LN 을 사용했고, 그것은 근본적 확률 분호 함수라 가정한다. / 본 논문에서는 안개의 intensity 분포를 모델하고 10-100m 안의 안개에 대해 진행하고, 15,33,55 mm/h 의 비에 대해 모델링함./ 이 방법을 [16] 보다 더 선호하는 이유는, 16의 모델 중 augmented intensity 가 오리지날 intensity 의 함수이다.( 이부분이 16의 단점으로 지적하고 자기가 모델을 만든것! , ( ˜I = I · e −β·d )) /

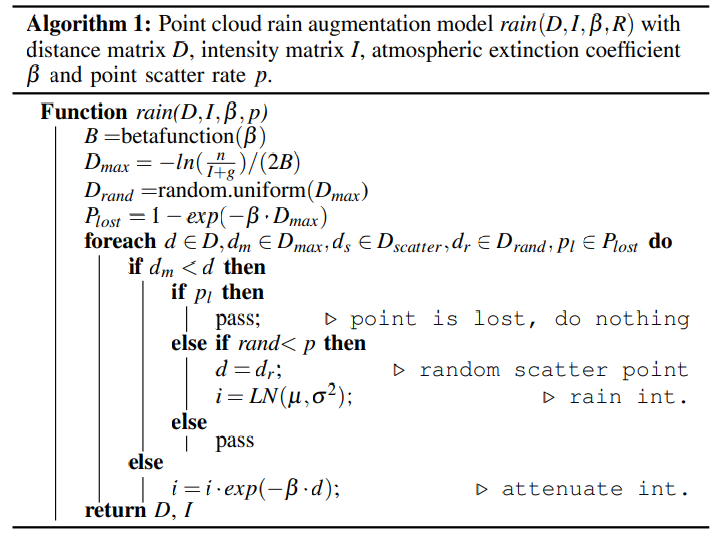

2) rain model : [16] 기반해서 fog augmentation 은 햇고, 본 논문은 더 나아가 rain augmentation 까지 했음 / 그리하여 16에서 사용된 파라미터를 사용해서 natural rainfall 과 scattered points 가 equivalent 하게 16논문이 적용됨 / atmospheric extinction coefficient β (대기 특징 계수) 는 0.01 로 설정하고 for rain augmentation/ p 라는 point scatter arete 는 정의한다 각 포인트 마다의 확률이고 어떤 확률이냐 하면 random scatter point 확률인듯 / 얻은 point-wise GT 데이터는 p 값을 raindrops 에 대해 계산이 가능하다(GT 데이터는 각 포인트마다 라벨리이 되어있으니 p 라는 point-wise의 데이터에 대해 확률(비일 확률) 을 구할수 있는듯) / 그렇게 했을때 p 값은 15,33,55 mm/h 의 강우 상황에서 10% 0.7% 4.7% 가 나온다. / augmentation 을 하기위에 p 를 7.5%로 고정함, cnn 학습 안정에 도움이 되고, 실제 비와 scatter point 양이 match 된다 / 알고리즘 1 이 rain augmentation 에 대한 로직

2) Rain Model: Besides our modifications of the fog augmentation based on [16], we further developed a rain augmentation. Thereby the parameters from [16] are adapted to make the augmented scatter points equivalent to natural rainfall. The atmospheric extinction coefficient β is set to 0.01 for rain augmentation. The point scatter rate p defines the per point probability of random scatter points. The obtained point-wise ground truth data enables the calculation of p for raindrops, which is 10.61%, 0.73% and 4.70% for 15, 33 and 55 mm/h in the climate chamber. For the augmentation we finally fixed p at 7.5%, which stabilizes the CNN training, matches the quantity of scatter points in natural rainfall and is in the range of the derived probabilities from the climate chamber. The rain augmentation is described in

D. Network Architecture

1. lidar images 의 de-noising 을 위해 CNN 아키텍쳐를 적용 / WeatherNet을 제안함, LiLaNet[31] 의 efficient 한 variant(변형) 임 / de-noising task 의 최적화를 위해 network depth 를 줄인다 [31][37]의 13개 class 와 비교하면 제안하는건 3 class 가 있다./ inception layer fig2 를 적용하여 dilated convolution(넓게 퍼진 ?) 을 포함하여 더 많은 정보의 공간적 접근을 제공하고 receptive field 를 늘려 / 더 나아가 dropout layer 는 generalization 의 capabilit 를 늘리기 위해 삽입되었따. / 아래는 최적화 하는 파라미터들

For the de-noising of lidar images we adopt state-of-the-art CNN architectures for semantic segmentation of sparse point clouds. The proposed WeatherNet is an efficient variant of the LiLaNet introduced by [31]. In order to optimize the network for the de-noising task, we reduced the depth of the network given that the complexity of our task (3 classes) is reduced in comparison to full multi-class semantic segmentation (13 classes) [31], [37]. Additionally, we adapted the inception layer (Fig. 2) to include a dilated convolution to provide more information about the spatial vicinity by increasing the receptive field. Further, a dropout layer is inserted to increase the capability of generalization. The resulting network architecture is illustrated in Fig. 3. After optimization on the validation data set, the batch size is set to b = 20, the learning rate to α = 4·10−8 with a learning rate decay of 0.90 after every epoch. Adam solver is used to perform the training, with the suggested default values β1 = 0.9, β2 = 0.999 and ε = 10−8 [38].

IV. DATASET

A. Road Data Set

1. 날씨 conditions 과 manual annotation 은 매우 힘들고 복잡하다 / 31의 좋은 날씨의 데이터를 가지고 data augmentation 을 한다/

Creating a large-scale data set for training, validation and testing for the purpose of weather segmentation is very challenging, due to the fact that weather conditions are very unique and manual annotations are very difficult and complex. In order to re-use data sets, which were recorded under favorable weather conditions, like the data set from [31], we apply the developed data augmentation. Hence, we are able to utilize data sets recorded under favorable weather conditions with various traffic scenarios and roads types for the training of semantic weather segmentation

B. Climate Chamber Scenarios and Ground Truth Labels

1. clear, fog, rain 으로 모든 포인트가 라벨되게 함 / rain, fog 로 포인트 숫자가 없어지거나 하지는 않음 / 그리하여 포인트 클라우드는 정보를 담고있는데 어떤 정보냐 하면 기상적으로 보이는 가시 거리 혹은 rainfall rate 을 담는다 / rainfall rate 을 증가시키는 것은 scatter points 를 증가시키진 않는다, rainfall rate 가 직접적으로 추정되지는 않는다 , 그러나 라이다 센서의 degradation 의 extent(정도) 는 추정 가능하고 이 정보는 매우 자율주행에 의미있는 데이터이다.

In addition, the results indicate that no points are being lost and therefore the sum of fog or rain and valid points is equivalent to the number of points in reference conditions. Thus the point cloud contains the information to estimate the meteorological visibility or rainfall rate by determining the number of weather induced scattering points. As an increase in the rainfall rate does not necessarily results in an increase of scatter points, the rainfall rate cannot be estimated directly, but the extent of the degradation of the lidar sensor can be estimated. This information is incredibly valuable for an autonomous vehicle to adapt behavior to environmental conditions and sensor performance.

C. Data Split

17만개 데이터 학습, 검증, 테스트에 쓰임/ 7만개 chamber , 10만개 길거리 장면 이 데이터로 augmentation 함 / 60%−15%−25% 의 비율로 데이터를 쪼개서 사용했고 fig4cd 는 학습에 4ab 는 validation, test로 잘라서 사용함/ 그리고 overfitting 문제가 생길수 있어 학습데이터를 수평시야에서 약 60도 정도 잘라냄 , 추가로 데이터셋의 교집합 부분은 이미 31번 에서 사용함, 그리고 데이터셋의 교집합 부분을 좋은 날씨의 샘플에 넣어서 diversitty를 증가하고 road recording 을 더하고 동시에 class distribution 의 밸런스를 유지한다. / 그리하여 chamber & road 데이터셋은 10만개와 3만개의 road 샘플이 있고 그데이터는 augmentation 혹은 adverse 날씨가 아님/ 더 나아가 augment 를 통해 17만개로 늘림

In total, the data set contains about 175,941 samples for training, validation and testing containing chamber (72,800) and road (103,141) scenes, which can be used thanks to augmentation. Details about the number of samples and class distributions are stated in Table I. In order to reduce time correlations between samples which were recorded in the climate chamber, each setup is only used in the training (Fig. 4d, 4c), validation (Fig. 4b) or test data split (Fig. 4a). In total we obtain a data split of about (60%−15%−25%) for training, validation and test. To reduce an over-fitting to local dependencies and the scenes in the climate chamber, we cropped the image for training to a forward facing view of about 60◦ in the horizontal field of view. In addition, a subset of the data set, already used in [31], is added as samples in favorable weather conditions to increase the diversity and add road recordings while maintaining a balanced class distribution. Thus, the ’chamber & road’ data set contains 103,878 and 31,078 road samples without augmentation or adverse weather. Further, the amount and diversity of the training data can be increased by a manifold, because the augmentation enables the utilization of large-scale road data set, which results in 103,141 road samples and in 175,941 in total.

V. EXPERIMENTS

1. 이 섹션에서는 여러 방법을 통해 weatehrNet 의 성능향상을 함, 특히 자연스러운 rainfall recorded on road 를 생성하기 위함/ We apply the Intersection-over-Union (IoU) metric for performance evaluation, according to the Cityscapes Benchmark Suite [31], [37]. / 그들의 결과 또한 table 1 에 있음/ weather augmentation 에 대한 영향을 디테일하게 보기 위해, 3가지 차별된 데이터 집합으로 분류하고(without augmentation_) 실험1,2,3으로 나눔/ 모든 evaluations 은 experiment2에서 설정한 데이터로 진행, 그 데이터는 자동라벨이 붙어있고 도로 데이터인데 rain,for,augmentation 이 없는 데이터이다. /table1 에서 말하길 road 데이터와 제안한 weather augmentation 을 사용하면 선응이 증가한다. / 또한 분류된 fog,rain on chamber data 를 validating 하게되면 , raod data 의 usage 와 augmentation 은 성능이 증가한다. / 그것은 의미한다 network 는 구분할수있다 두 도메인에 대해 weather influence 를 구분가능하다/

As described in section III and IV, we obtained a largescale data set recorded on public roads and in a dedicated climate chamber with different types of point-wise annotations. In this Section we describe several approaches to train the proposed WeatherNet in order to maximize the performance and analyze the benefit of weather augmentation, especially for the generalization to natural rainfall recorded on roads. We apply the Intersection-over-Union (IoU) metric for performance evaluation, according to the Cityscapes Benchmark Suite [31], [37]. An overview of all experiments and their results is given in Table I. In order to evaluate the influence of the weather augmentation in detail, we trained the network on three different data subsets with and without augmentation, defined as experiment 1, 2 and 3: 1) Chamber: only chamber data as baseline experiment. 2) Chamber & Road: Climate chamber data set and a subset of road data without any augmentation or adverse weather on roads. 3) Chamber & Road with Augmentation: Climate chamber data set and class balanced road data set without adverse weather, but with augmentation. Note, all evaluations are based on the test data set from experiment 2, which contains autolabeled annotations and road data without fog, rain or augmentation. Table I showsthat the performance is significantly increased by using road data and the proposed weather augmentation. Besides validating the classes fog and rain only on chamber data, the usage of road data and the augmentation leads to an increase in the overall performance and per class IoU. This indicates that the network is able to identify weather influences in both domains and gains a general understanding of the scene.

2. DROR filter의 결과가 나타내는건, 국부적 주변만의 feature 는 dense wat4er drops을 걸러내기에 적합하지 않다. / 제안된 CNN 방법은 DROR 보다 성능이 많이 뛰어남/ DROR 의 파라미터는 [4]로부터 가져옴 / 더 나아가 본문제안방법을 semantic segmentation models RangeNet21, RangeNet53 [32] and LiLaNet [7], 와 비교하였고, 좋은 비교가능한 결과를 보임/ 증명하였다 기본 cnn 기반 weatehr segmentation and denoising 은 가치있고 기하학적 접근보다 성능이 뛰어남/ 추가로 weathernet 은 cnn 보다 성능이 좋고 특히 마지막 experiemtn3에서 훨씬 적은 trainable parameter르 가지고 inference time 을 가진다. 그리하여 preprocessing step 으로 적용 가능하다 / confusion matrics fig.5 에서 말하기를 rain,fog 의 대부분 클래스들은 섞여있고, fog와rain 은 최종적으로 water droplet 으로 구성되어있고 distribution,density , size of water droplets 만 다르고 그것 또한 plausible 하다 / 더 나아가 lidar sensor는 이것들을 다르게 받아들이지 안흔ㄴ다. / point cloud 필터링에서 이것들이 섞여있는 것은 중요하지 않다 : confusion(혼란, rain for 가 섞이는 것을 얘기하는듯함)_은 classifying weather condition 에만 불리함이 있다/ 더 나아가 augmentation 은 rain 과 fog 의 혼란에 significant decrease 를 일으킨다 /

The results of the baseline DROR filter indicates, that the local vicinity alone is not a proper feature to filter scatter points caused by dense water drops. The proposed CNN approach is outperforming DROR by an order of magnitude. The parameters for the DROR are taken from [4], except for the horizontal sensor resolution which is adapted to the utilized ’VLP32C’.Furthermore, we compare our approach to the state-of-theart semantic segmentation models RangeNet21, RangeNet53 [32] and LiLaNet [7], which provide comparable results. Consequently, we prove that the basic idea of CNN-based weather segmentation and de-noising is valuable and surpasses geometrically based approaches. In addition, the proposed optimized WeatherNet is mostly outperforming the other CNNs, especially on the final experiment 3, and has a significantly lower number of trainable parameters and inference time. Thus, the network can be applied as preprocessing step.

The confusion matrices Fig. V show that mostly classes rain and fog are mixed up. Since fog and rain ultimately consist of water droplets and differ only in distribution, density and size of the water droplets, this is plausible. Moreover, lidar sensors are not designed to perceive this difference. For point cloud filtering these mix-ups are not important; a confusion is disadvantageous only with regard to classifying distinct weather conditions. Furthermore the augmentation leads to a significant decrease in confusion between rain and fog.

A. Qualitative Results on Dynamic Chamber Data

challengin dynamic scenes 에 대한 질적 결과가 이 섹션에 있다. / GT data 가 이 dynamic scenes 에 대해 없고(두개의 다른 weather condition) 글피하여 auto-labeling procedure 은 적용 불가하다 / 그럼에도 불구하오 fig.1,7,8 은 필터 결과를 잘 보여준다 /